The Confusion Matrix

Define success for AI projects

The Greek sphinx challenged people to confusing riddles and ate them when they answered wrong. In much the same way, artificial intelligence algorithms can behave in confusing, non-deterministic ways. Chances are that AI affects your business, but it’s easy to get caught up in the hype without understanding whether AI is helping achieve your product’s goals. Don’t get lured into the sphinx’s trap without deciphering its riddles.

Product managers can use a statistical matrix to define success for AI projects.

From Deterministic to Probabilistic

A decade before generative AI became mainstream, machine learning was already being used for predictive modeling, and it still is. Like generative AI, ML algorithms can feel like a black box: we feed them inputs, and they provide us with outputs. This isn’t inherently a problem, as we can use and measure algorithms without knowing their inner workings. Consider a basic calculator. Even if we can’t solve 123 × 456 in our heads, we can trust the algorithm’s result to be the same every time because the algorithm is deterministic.

In other words, deterministic means that a given set of inputs always returns the same outputs. Probabilistic means that the same inputs can return different outputs from one run to the next.
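To make the distinction concrete, here is a minimal Python sketch. The function names `multiply` and `noisy_screen` and the 0.7 probability are illustrative assumptions, not taken from any real product.

```python
import random

def multiply(a, b):
    """Deterministic: the same inputs always give the same output."""
    return a * b

def noisy_screen(risk, rng):
    """Probabilistic: returns True with probability `risk` (hypothetical model)."""
    return rng.random() < risk

# Ask the calculator the same question 1,000 times and collect the distinct answers.
calculator_answers = {multiply(123, 456) for _ in range(1000)}

# Ask the probabilistic function the same question 1,000 times.
rng = random.Random()
screen_answers = {noisy_screen(0.7, rng) for _ in range(1000)}

print(calculator_answers)  # {56088}
print(screen_answers)      # almost certainly contains both True and False
```

The calculator collapses to a single answer, while the probabilistic function returns a mix of answers for an identical input.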

Ironically, the inexactitude of AI algorithms is their primary feature. We live in a complex and uncertain world, and our most critical problems don’t have straightforward solutions. Product management is the art of solving problems in nebulous environments, and the confusion matrix is our tool to measure AI’s effectiveness at solving these problems.

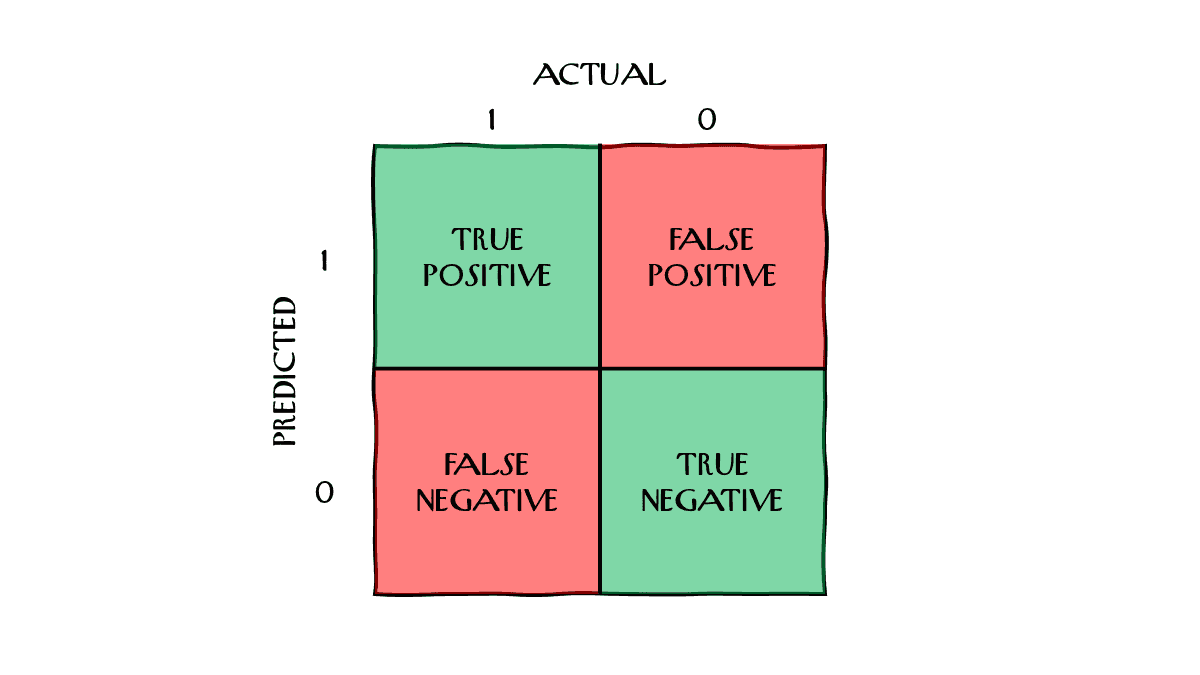

The Confusion Matrix

Given a dataset that pairs each predicted true/false label with the actual one, you can measure the effectiveness of a classification algorithm with a confusion matrix. It’s a simple two-by-two grid with four outcomes:

- True Positive (TP) = correctly predicted a condition’s presence

- True Negative (TN) = correctly predicted a condition’s absence

- False Positive (FP) = incorrectly predicted a condition’s presence

- False Negative (FN) = incorrectly predicted a condition’s absence

Adding context to the labels can make a confusion matrix less confusing. Consider a screening test for an emerging virus that makes people speak in riddles, SPHINX-24:

- TP = detected SPHINX-24

- TN = correctly ruled out SPHINX-24

- FP = false alarm for SPHINX-24

- FN = missed a case of SPHINX-24
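As a rough illustration, the four values can be counted from paired labels in a few lines of Python. The function name `confusion_counts` and the ten screening results are made up for this sketch; they are not real data.

```python
def confusion_counts(actual, predicted):
    """Count TP, TN, FP, and FN from paired true/false labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, guess in zip(actual, predicted):
        if guess and truth:
            counts["TP"] += 1   # detected SPHINX-24
        elif not guess and not truth:
            counts["TN"] += 1   # correctly ruled out
        elif guess and not truth:
            counts["FP"] += 1   # false alarm
        else:
            counts["FN"] += 1   # missed case
    return counts

# Hypothetical screening results for ten people (True = has SPHINX-24).
actual    = [True, True, True,  False, False, False, False, False, True, False]
predicted = [True, True, False, False, True,  False, False, False, True, False]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 3, 'TN': 5, 'FP': 1, 'FN': 1}
```

The four counts always add up to the number of people screened, which is a quick sanity check on any confusion matrix.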

Contextualizing the accuracy of an AI algorithm in layman’s terms can ground it, making its results accessible to people regardless of background. A product manager can create specific metrics with these four values, depending on their goals.

Confusion Metrics

We could set goals around the four values directly. Any algorithm should aim to reduce both false positives and false negatives, but different use cases may prioritize one over the other.

If the goal is to reduce the spread of a novel virus, decreasing the number of false negatives is a meaningful target. If a person is incorrectly flagged as having the virus (a false positive), they must quarantine for a week. While disruptive to their routine, a healthy person quarantining is better than an unhealthy person spreading an unknown virus to dozens of others.
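A goal of fewer false negatives is commonly tracked with recall (also called sensitivity), which is TP / (TP + FN). The sketch below uses hypothetical counts for two versions of a screening test; the `recall` helper and the numbers are assumptions for illustration.

```python
def recall(tp, fn):
    """Share of actual positive cases that were caught: TP / (TP + FN)."""
    actual_positives = tp + fn
    if actual_positives == 0:
        return None  # nobody in the sample was infected, so recall is undefined
    return tp / actual_positives

# Hypothetical counts: 100 infected people screened by two test versions.
recall_v1 = recall(tp=80, fn=20)
recall_v2 = recall(tp=95, fn=5)
print(recall_v1, recall_v2)  # 0.8 0.95
```

In this made-up example, the second version misses 5 infected people instead of 20, so it is the better choice when false negatives are the priority.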

In email spam detection, reducing the number of false positives is a better goal. If a person receives a spammy email (a false negative), they can simply delete it. But if a spam filter incorrectly stops a critical email, such as a tax document, a person could inadvertently break the law. Letting a few spam messages through the filter is preferable to blocking legitimate messages.
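A goal of fewer false positives is commonly tracked with precision, which is TP / (TP + FP). The sketch below assumes a hypothetical filter that flagged 200 messages; the `precision` helper and the counts are illustrative only.

```python
def precision(tp, fp):
    """Share of flagged items that were truly positive: TP / (TP + FP)."""
    flagged = tp + fp
    if flagged == 0:
        return None  # nothing was flagged, so precision is undefined
    return tp / flagged

# Hypothetical counts: 200 messages flagged, 190 were spam, 10 were legitimate.
spam_precision = precision(tp=190, fp=10)
print(spam_precision)  # 0.95
```

Here 5% of the blocked messages were legitimate, which is the figure a product manager would want to drive down.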

Other use cases could require a balance. In fraud detection, businesses need to strike a balance between loss prevention (reducing false negatives) and customer friction (reducing false positives), so metrics like F1 score or area under the curve (AUC) could help optimize between the two goals. Both build on the four values in the confusion matrix: F1 is calculated from them directly, while AUC summarizes how they shift as the algorithm’s decision threshold changes.
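The trade-off can be sketched with a small threshold sweep. Everything here is hypothetical: the fraud scores, the outcomes, and the helper names `f1_score` and `counts_at` are invented for illustration, and returning 0.0 when F1 is undefined is just one common convention.

```python
def f1_score(tp, fp, fn):
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0

def counts_at(threshold, scores, is_fraud):
    """Return (TP, FP, FN) when transactions scoring >= threshold are flagged."""
    tp = fp = fn = 0
    for score, fraud in zip(scores, is_fraud):
        flagged = score >= threshold
        if flagged and fraud:
            tp += 1
        elif flagged and not fraud:
            fp += 1
        elif not flagged and fraud:
            fn += 1
    return tp, fp, fn

# Made-up model scores and true outcomes for six transactions.
scores   = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
is_fraud = [True, True, False, True, False, False]

for threshold in (0.25, 0.50, 0.75):
    tp, fp, fn = counts_at(threshold, scores, is_fraud)
    print(threshold, "FP:", fp, "FN:", fn, "F1:", round(f1_score(tp, fp, fn), 2))
```

With this toy data, a threshold of 0.25 eliminates false negatives but produces two false positives, while 0.75 eliminates false positives but misses one fraud case. AUC summarizes this kind of sweep across all thresholds and is usually left to a statistics library.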

Even if a product manager must rely on a data scientist to calculate AUC, understanding the confusion matrix provides the foundation needed to set sound product objectives.